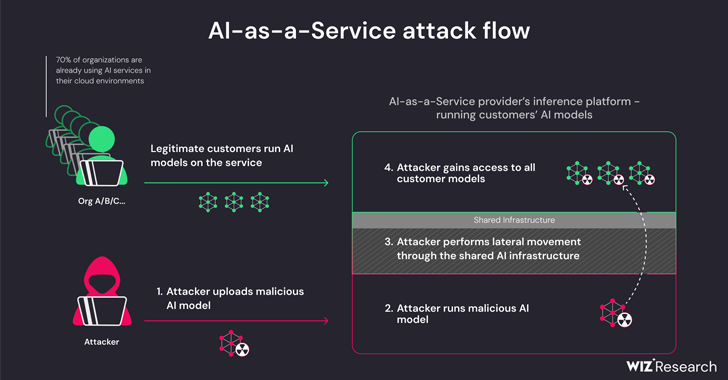

New research has found that artificial intelligence (AI)-as-a-service providers like Hugging Face are susceptible to two critical risks that could allow threat actors to escalate privileges, gain cross-tenant access to other customers’ models, and even take over continuous integration and continuous deployment (CI/CD) pipelines.

“Malicious models pose a serious risk to AI systems, especially AI-as-a-service providers, because potential attackers could exploit these models to carry out cross-tenant attacks,” Wiz researchers Shir Tamari and Sagi Tzadik said.

“The potential impact is devastating, as attackers may be able to access millions of private AI models and apps stored within AI-as-a-service providers.”

The development comes as machine learning pipelines have emerged as a brand new supply chain attack vector, with repositories like Hugging Face becoming an attractive target for adversarial attacks designed to collect sensitive information and access target environments.

The threats are two-pronged and arise from shared inference infrastructure takeover and shared CI/CD takeover. They make it possible to run untrusted models uploaded to the service in pickle format and to take control of the CI/CD pipeline to perform a supply chain attack.

The cloud security firm’s findings show that it is possible to breach the service that runs custom models by uploading a rogue model and using container escape techniques to break out of its own tenant and compromise the entire service, effectively allowing threat actors to gain cross-tenant access to other customers’ models stored and run on Hugging Face.

“Hugging Face will still allow the user to infer the Pickle-based model loaded on the platform infrastructure, even if it is deemed dangerous,” the researchers explained.
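To illustrate how a pickle file can be flagged as potentially dangerous without loading it, here is a minimal sketch using Python's standard `pickletools` module. It is not Hugging Face's scanner; the opcode list and the `suspicious_opcodes` helper are assumptions made for illustration. The check is deliberately crude, since legitimate model files also use these opcodes, and a real scanner would need to inspect which objects are being imported.

```python
import pickle
import pickletools

# Opcodes that import or call objects while a pickle is being loaded.
# This list is an illustrative assumption, not an exhaustive policy.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def suspicious_opcodes(data: bytes) -> set[str]:
    """Return the suspicious opcode names found in a pickle, without loading it."""
    found = {opcode.name for opcode, _arg, _pos in pickletools.genops(data)}
    return found & SUSPICIOUS_OPCODES


class Demo:
    """Harmless stand-in for a crafted object: it asks the loader to call print()."""

    def __reduce__(self):
        return (print, ("code ran during unpickling",))


# Plain data needs no imports or calls; the crafted object does.
plain = pickle.dumps({"weights": [0.1, 0.2]})
crafted = pickle.dumps(Demo())  # serializing does not execute anything

if __name__ == "__main__":
    print("plain:", suspicious_opcodes(plain))
    print("crafted:", suspicious_opcodes(crafted))
```

Because the scan only reads the opcode stream, it never executes the payload, which is the property that makes static flagging safe to run on untrusted uploads.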

This essentially allows an attacker to create a PyTorch (Pickle) model with the ability to execute arbitrary code on load and chain it with misconfigurations in Amazon Elastic Kubernetes Service (EKS) to gain elevated privileges and move laterally within the cluster.
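The reason a pickle can run code on load is that the format lets a serialized object name any importable callable for the loader to invoke. One defensive pattern, described in the Python `pickle` documentation, is to override `Unpickler.find_class` with an allowlist. The sketch below assumes a tiny, hypothetical allowlist (`ALLOWED`) and a harmless `Payload` class that only tries to call `print`; it is not how Hugging Face or PyTorch load models.

```python
import io
import pickle

# Hypothetical allowlist of (module, name) pairs the loader may import.
ALLOWED = {("collections", "OrderedDict")}


class RestrictedUnpickler(pickle.Unpickler):
    """Unpickler that refuses to import anything outside ALLOWED."""

    def find_class(self, module, name):
        if (module, name) in ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data: bytes):
    """Load a pickle from bytes using the restricted unpickler."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Payload:
    """Harmless stand-in for a malicious object: asks the loader to call print()."""

    def __reduce__(self):
        return (print, ("this would run on load",))


if __name__ == "__main__":
    try:
        restricted_loads(pickle.dumps(Payload()))
    except pickle.UnpicklingError as exc:
        print("refused:", exc)
```

An allowlist reduces exposure but does not make pickle safe in general, which is consistent with the researchers' advice to run untrusted models in a sandbox.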

“The secrets we obtained could have had a significant impact on the platform if they had been in the hands of an attacker,” the researchers said. “Secrets within shared environments can often lead to cross-tenant access and leakage of sensitive data.”

To mitigate this issue, it is recommended to enable IMDSv2 with Hop Limit to prevent pods from accessing the Instance Metadata Service (IMDS) and obtaining the role of a node within the cluster.
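As a rough sketch of what that setting looks like in practice, the helper below builds the arguments for the EC2 `ModifyInstanceMetadataOptions` API: session tokens are required (IMDSv2) and the PUT response hop limit is set to 1, so token responses do not survive the extra network hop to a pod. The function names and the idea of passing in a client are assumptions for illustration; with boto3 installed, the client would typically be `boto3.client("ec2")`.

```python
def imdsv2_options(instance_id: str, hop_limit: int = 1) -> dict:
    """Build arguments that require IMDSv2 tokens and cap the hop limit."""
    return {
        "InstanceId": instance_id,
        "HttpEndpoint": "enabled",             # keep IMDS reachable from the node itself
        "HttpTokens": "required",              # IMDSv2 only: reject token-less requests
        "HttpPutResponseHopLimit": hop_limit,  # 1 = token response cannot cross an extra hop
    }


def enforce_imdsv2(ec2_client, instance_id: str):
    """Apply the options using an EC2 client supplied by the caller."""
    return ec2_client.modify_instance_metadata_options(**imdsv2_options(instance_id))
```

For managed node groups, the same options are usually set in the launch template rather than per instance, so new nodes start with them applied.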

The research also found that it is possible to achieve remote code execution via a specially crafted Dockerfile when running an application on the Hugging Face Spaces service, and to use it to pull and push (i.e., overwrite) all the images available on an internal container registry.

Hugging Face, in a coordinated disclosure, said it had addressed all the identified issues. It is also urging users to use models only from trusted sources, enable multi-factor authentication (MFA), and refrain from using pickle files in production environments.

“This research demonstrates that using untrustworthy AI models (particularly those based on Pickle) could result in serious security consequences,” the researchers said. “Additionally, if you intend to allow users to use untrusted AI models in your environment, it is extremely important to ensure that they work in a sandbox environment.”

The disclosure follows other research from Lasso Security that found it is possible for generative AI models like OpenAI ChatGPT and Google Gemini to distribute malicious (and non-existent) code packages to unsuspecting software developers.

In other words, the idea is to find a recommendation for an unpublished package and publish a trojanized package in its place to propagate the malware. The phenomenon of AI package hallucinations highlights the need for caution when relying on large language models (LLMs) for coding solutions.
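One cautious way to handle LLM-suggested dependencies is to check them against a list of packages a team has already vetted before installing anything. The sketch below is an illustration only: the `VETTED` set and the helper names are hypothetical, and the parsing covers simple requirement lines rather than the full requirement syntax.

```python
import re

# Hypothetical set of package names the team has already reviewed.
VETTED = {"requests", "numpy"}


def normalize(name: str) -> str:
    """Normalize a package name (lowercase, runs of -, _ and . become a single -)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str):
    """Extract the package name from a simple requirement line, or None."""
    match = re.match(r"\s*([A-Za-z0-9][A-Za-z0-9._-]*)", line)
    return normalize(match.group(1)) if match else None


def unvetted(requirements, vetted=VETTED):
    """Return the names that are not on the vetted list and need manual review."""
    names = (requirement_name(line) for line in requirements)
    return [name for name in names if name and name not in vetted]
```

Checking that a name merely exists on a public index is not sufficient here, since the attack works precisely by registering the hallucinated name; a vetted list shifts the decision to a human review step.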

AI company Anthropic, for its part, has also detailed a new method called “many-shot jailbreaking” that can be used to bypass safety protections built into LLMs and produce responses to potentially harmful queries by exploiting the models’ context window.

“The ability to input ever-increasing amounts of information has clear benefits for LLM users, but it also comes with risks: vulnerabilities to jailbreaks that exploit the longer context window,” the company said earlier this week.

The technique, simply put, involves introducing a large number of fake dialogues between a human and an AI assistant within a single prompt for the LLM in an attempt to “steer the model’s behavior” and get it to respond to queries it otherwise wouldn’t (e.g., “How do I build a bomb?”).