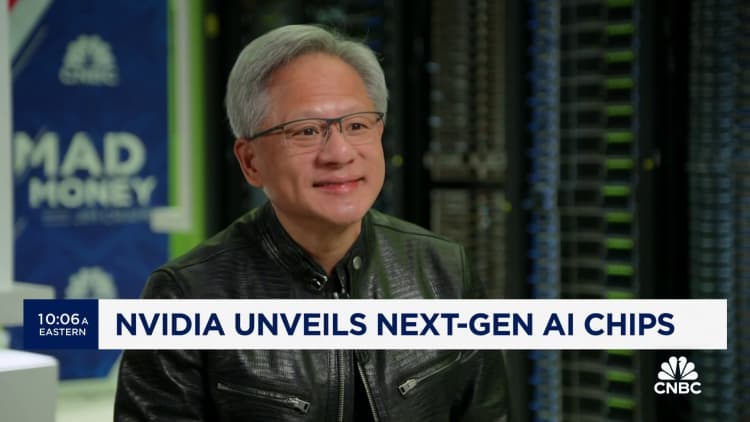

Nvidia’s next-generation graphics processor for artificial intelligence, called Blackwell, will cost between $30,000 and $40,000 per unit, CEO Jensen Huang told CNBC’s Jim Cramer.

“It will cost 30 to 40 thousand dollars,” Huang said, holding up the Blackwell chip.

“We had to invent new technology to make this possible,” he continued, estimating that Nvidia spent about $10 billion on research and development.

The pricing suggests that the chip, which is likely to be in high demand for training and deploying AI software like ChatGPT, will be priced similarly to its predecessor, the H100, or the “Hopper” generation, which costs between $25,000 and $40,000 per chip, according to analyst estimates. The Hopper generation, introduced in 2022, represented a significant price increase for Nvidia’s AI chips compared with the previous generation.

Nvidia CEO Jensen Huang compares the size of the new “Blackwell” chip with the current H100 “Hopper” chip at the company’s developer conference in San Jose, California.

Nvidia

Nvidia announces a new generation of AI chips about every two years. The newest ones, like Blackwell, are generally faster and more power efficient, and Nvidia uses the hype around a new generation to drum up orders for new GPUs. Blackwell combines two chips and is physically larger than the previous generation.

Nvidia’s AI chips have helped triple the company’s quarterly sales since the AI boom began in late 2022, when OpenAI announced ChatGPT. Most major AI companies and developers have used Nvidia’s H100 to train their AI models over the past year. For example, Meta said this year that it is buying hundreds of thousands of Nvidia H100 GPUs.

Nvidia does not reveal the list price of its chips. They come in different configurations, and the price that an end customer such as Meta or Microsoft might pay depends on factors such as the volume of chips purchased and whether the customer buys the chips from Nvidia directly as part of a complete system or through a vendor such as Dell, HP or Supermicro that builds AI servers. Some servers are built with up to eight AI GPUs.

Nvidia announced at least three different versions of its Blackwell AI accelerator on Monday: a B100, a B200, and a GB200 that pairs two Blackwell GPUs with an Arm-based CPU. They have slightly different memory configurations and are expected to ship later this year.