Cybersecurity researchers have discovered that third-party plugins available for OpenAI ChatGPT could serve as a new attack surface for threat actors looking to gain unauthorized access to sensitive data.

Security flaws found directly in ChatGPT and within the ecosystem could allow attackers to install malicious plugins without users’ consent and hijack accounts on third-party websites like GitHub, according to new research published by Salt Labs.

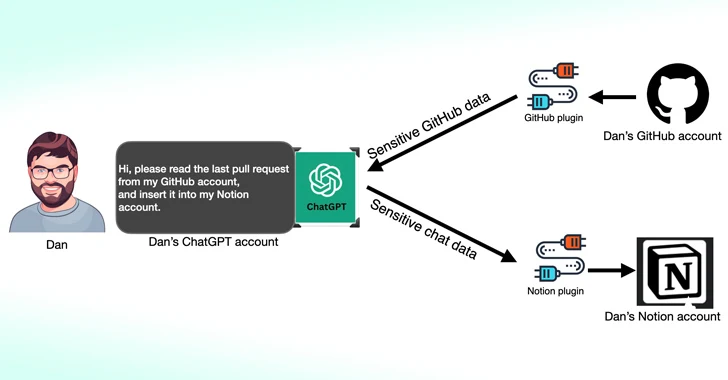

ChatGPT plugins, as the name suggests, are tools designed to run on top of the large language model (LLM) with the goal of accessing up-to-date information, performing calculations, or connecting to third-party services.

OpenAI has since also introduced GPTs, which are bespoke versions of ChatGPT tailored for specific use cases, while reducing dependencies on third-party services. Starting March 19, 2024, ChatGPT users will no longer be able to install new plugins or create new conversations with existing plugins.

One of the flaws discovered by Salt Labs abuses the OAuth workflow to trick a user into installing an arbitrary plugin, taking advantage of the fact that ChatGPT does not validate that the user actually initiated the installation.

This could effectively allow threat actors to intercept and exfiltrate any data shared by the victim, which could contain proprietary information.
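A common defense against this class of OAuth flaw is to bind each authorization flow to the session that started it, typically through the `state` parameter. The following is a minimal sketch of that idea, not ChatGPT's actual implementation; the in-memory store and the function names `begin_install` and `finish_install` are hypothetical.

```python
import hmac
import secrets

# Hypothetical in-memory store: session id -> state value issued for that session.
_pending_states = {}


def begin_install(session_id):
    """Run when a logged-in user starts a plugin install.

    Returns a random, unguessable value to send as the OAuth `state` parameter.
    """
    state = secrets.token_urlsafe(32)
    _pending_states[session_id] = state
    return state


def finish_install(session_id, returned_state):
    """Run on the OAuth callback.

    Accepts the callback only if this same session started the flow
    and the returned state matches the one issued to it.
    """
    expected = _pending_states.pop(session_id, None)  # single use
    if expected is None:
        return False
    return hmac.compare_digest(expected.encode(), returned_state.encode())
```

With a check like this, a link crafted by an attacker carries a `state` value issued to the attacker's session, so the victim's callback would be rejected.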

The cybersecurity firm also uncovered issues in PluginLab that threat actors could weaponize to conduct zero-click account takeover attacks, allowing them to gain control of an organization’s account on third-party websites like GitHub and access its source code repositories.

“‘auth.pluginlab[.]ai/oauth/authorized’ does not authenticate the request, which means the attacker can enter another memberId (i.e. the victim) and get a code that represents the victim,” explained security researcher Aviad Carmel. “With that code, [the attacker] can use ChatGPT and access the victim’s GitHub.”

The victim’s memberId can be obtained by querying the “auth.pluginlab[.]ai/members/requestMagicEmailCode” endpoint. There is no evidence that user data was compromised using the flaw.
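The missing control described by the researcher is a check that the caller is the member the code is being issued for. A minimal sketch of such a check is shown below; it is an illustration only, and the function `issue_code` and its parameters are hypothetical rather than part of PluginLab's API.

```python
import secrets


def issue_code(authenticated_member_id, requested_member_id):
    """Issue an authorization code only for the authenticated caller.

    `authenticated_member_id` is assumed to come from a verified session or
    token, never from a value the client can set freely in the request.
    """
    if authenticated_member_id is None:
        raise PermissionError("request is not authenticated")
    if authenticated_member_id != requested_member_id:
        raise PermissionError("memberId does not match the authenticated caller")
    return secrets.token_urlsafe(16)
```

Under this sketch, a request that supplies someone else's memberId fails before any code is generated.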

An OAuth redirect manipulation bug was also discovered in several plugins, including Kesem AI, which could allow an attacker to steal account credentials associated with the plugin itself by sending a specially crafted link to the victim.
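Redirect manipulation of this kind is generally prevented by comparing the supplied `redirect_uri` against a list of pre-registered values using exact string matching. The sketch below illustrates the difference between an exact match and a loose prefix check; the URLs are placeholders and neither function reflects how any named plugin is actually implemented.

```python
# Hypothetical redirect URI registered in advance by the plugin developer.
ALLOWED_REDIRECT_URIS = frozenset({"https://plugin.example/oauth/callback"})


def is_allowed_redirect(redirect_uri):
    """Exact string match against the registered redirect URIs."""
    return redirect_uri in ALLOWED_REDIRECT_URIS


def naive_prefix_check(redirect_uri):
    """Shown only for contrast: prefix matching is easy to bypass."""
    return redirect_uri.startswith("https://plugin.example")


crafted = "https://plugin.example.attacker.test/steal"
print(naive_prefix_check(crafted))   # True: the crafted link slips through
print(is_allowed_redirect(crafted))  # False: the exact match rejects it
```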

The development comes weeks after Imperva detailed two cross-site scripting (XSS) vulnerabilities in ChatGPT that could be chained together to take control of any account.

In December 2023, security researcher Johann Rehberger demonstrated how malicious actors could create custom GPTs capable of phishing for user credentials and transmitting the stolen data to an external server.

New remote keylogging attack on AI assistants

The findings also follow new research published this week on an LLM side-channel attack that uses token length as a hidden means to extract encrypted responses from AI assistants on the web.

“LLMs generate and send responses as a series of tokens (similar to words), with each token transmitted from the server to the user as it is generated,” said a group of academics from Ben-Gurion University and Offensive AI Research Lab.

“While this process is encrypted, the sequential transmission of tokens exposes a new side channel: the token length side channel. Despite the encryption, the size of the packets can reveal the length of the tokens, potentially allowing network attackers to infer sensitive and confidential information shared in private AI assistant conversations.”

This is achieved through a token inference attack designed to decipher responses in encrypted traffic by training an LLM capable of translating token-length sequences into their natural language (i.e., plaintext) sentence counterparts.

In other words, the main idea is to intercept chat responses from an LLM provider in real time, use network packet headers to infer the length of each token, extract and parse text segments, and leverage a custom LLM to infer the response.
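The length-inference step can be illustrated with a small simulation. It assumes, for simplicity, that each streamed message carries exactly one token, that encryption preserves plaintext length, and that every message has the same fixed overhead; the `OVERHEAD` value is made up for the example, and the final step of mapping lengths back to text with a trained model is not shown.

```python
# Hypothetical fixed per-message overhead in bytes (framing, wrapper, auth tag).
OVERHEAD = 40


def observed_sizes(tokens):
    """What a network observer sees: one ciphertext size per streamed token."""
    return [OVERHEAD + len(token.encode("utf-8")) for token in tokens]


def infer_token_lengths(sizes):
    """Recover each token's length by subtracting the known overhead."""
    return [size - OVERHEAD for size in sizes]


tokens = ["I", " have", " a", " rash"]
sizes = observed_sizes(tokens)
print(sizes)                       # [41, 45, 42, 45]
print(infer_token_lengths(sizes))  # [1, 5, 2, 5]
```

The observer never sees the plaintext, yet the sequence of lengths is exactly the input that the token inference model described above would consume.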

Two key prerequisites for carrying out the attack are an AI chat client running in streaming mode and an adversary capable of capturing network traffic between the client and the AI chatbot.

To counter the effectiveness of the side-channel attack, companies developing AI assistants are advised to apply random padding to obscure the actual length of tokens, transmit tokens in larger groups rather than individually, and send complete responses at once rather than in a token-by-token manner.
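Two of these mitigations, random padding and grouping tokens, can be sketched in a few lines. This is an illustrative example under stated assumptions rather than any vendor's implementation: the 2-byte length prefix, the `MAX_PAD` limit, and the function names are all hypothetical choices.

```python
import secrets
import struct

MAX_PAD = 32  # hypothetical upper bound on padding bytes per message


def pad_message(payload, max_pad=MAX_PAD):
    """Prefix the payload with its length, then append 0..max_pad zero bytes.

    The amount of padding is random, so the message size no longer maps
    directly to the token length. Payloads must be under 65,536 bytes
    because the length prefix is an unsigned 16-bit integer.
    """
    pad_len = secrets.randbelow(max_pad + 1)
    return struct.pack(">H", len(payload)) + payload + bytes(pad_len)


def unpad_message(message):
    """Recover the original payload using the length prefix."""
    (length,) = struct.unpack(">H", message[:2])
    return message[2:2 + length]


def batch_tokens(tokens, group_size=4):
    """Join tokens into groups so individual token boundaries are not sent."""
    return ["".join(tokens[i:i + group_size])
            for i in range(0, len(tokens), group_size)]
```

Both approaches trade bandwidth or responsiveness for privacy: padding inflates every message, while batching delays when the first words of a response appear.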

“Balancing security with usability and performance represents a complex challenge that requires careful consideration,” the researchers concluded.